Autonomous Car Development

| ✅ Paper Type: Free Essay | ✅ Subject: Engineering |

| ✅ Wordcount: 3251 words | ✅ Published: 03 Nov 2020 |

Abstract:

This article describes the self-driving robotic technology used in cars and explains how the challenges run by DARPA (Defense Advanced Research Projects Agency) drove significant innovation in robotic cars. It also reviews the technologies used in autonomous cars and discusses the inaccuracies and obstacles encountered during the various challenges.

Introduction:

The invention of autonomous cars has influenced the automobile industry in the 21st century. Today, most people use cars for daily transportation. However, the inefficiency of human-driven cars costs thousands of lives in millions of accidents, and billions of hours are wasted in busy streets, which in turn wastes billions of gallons of fuel. Reviewing advances in the relevant technologies can help in creating more efficient autonomous vehicles, which can reduce the number of accidents and traffic jams and so ease the problems of clogged roads. This can help in building a more reliable, efficient and safe form of autonomous transportation.

Evaluation Of The Field (Past) – Technologies:

The first Grand Challenge for unmanned robotic cars, held in 2004, required vehicles to navigate 142 miles. The vehicles failed within the first few miles, which showed that the technology was not ready for prime time.

In 2005, DARPA repeated the Grand Challenge over 132 miles through mountains and dry lake beds, with Stanford’s Stanley claiming first place. In 2007, DARPA introduced a new competition, the Urban Challenge, in which the vehicles had to navigate a maze of roads while obeying Californian traffic rules. Junior claimed second place in the Urban Challenge. These challenges were milestones in robotics, since each stage brought innovations that allowed the robots to make decisions in unpredictable situations.

Figure 1 Stanford’s robot Stanley, winner of the Grand Challenge (Thrun, 2010)

Figure 2 CMU’s Boss, winner of the Urban Challenge (Thrun, 2010)

Figure 3 Junior, runner-up of the Urban Challenge (Thrun, 2010)

Technology (Stanley Vs Junior):

Vehicle Hardware:

The robots Stanley and Junior (Figures 1 and 3) are based on a Volkswagen Touareg and a Volkswagen Passat Wagon respectively. In both, a Linux-based computer system reads the vehicle’s data through a CAN bus interface.

- Stanley: A colour camera and five scanning laser range finders are mounted on the roof of the vehicle, pointing forward in the driving direction, to perceive the road ahead. Stanley’s computing runs on six Pentium M blades connected via Gigabit Ethernet.

- Junior: It uses a primary sensor that scans the environment, supplemented by five additional laser range finders. Junior uses two Intel quad-core machines for computing.

Both vehicles follow a similar concept: the sensors are mounted on the roof to detect long-range obstacles, together with the GPS antenna for the inertial navigation system (INS) and the radar sensors. The difference is that Junior’s sensors cover all directions, whereas Stanley’s point only forward. This reflects the requirement that Junior be aware of traffic in all directions.

Software Architecture:

The software is considered the key technology of an autonomous system. The software systems used in the successful DARPA challenge cars were modular and worked as a pipeline that converts sensor data into actuator controls. The pipeline covers three main functional areas:

- Perception: It maps sensor data into predictions about the environment.

- Planning: It makes driving decisions.

- Control: It actuates the vehicle controls (throttle, steering, etc.) based on the commands generated.

Both vehicles have a modular architecture, which makes the software more flexible in situations where the actual processing time is unknown. The modular design also reduces the reaction time to new sensor data, which is approximately 300 ms for both vehicles.
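The three-stage pipeline described above can be sketched as follows. This is only an illustration: the data classes, function names, thresholds and the "stop"/"cruise" decisions are assumptions made for the example and are not taken from Stanley's or Junior's software.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Obstacle:
    distance_m: float  # distance ahead of the vehicle
    lateral_m: float   # offset from the lane centre (positive = left)


@dataclass
class Command:
    throttle: float    # 0.0 .. 1.0
    brake: float       # 0.0 .. 1.0
    steering: float    # -1.0 (right) .. 1.0 (left)


def perceive(ranges_m: List[float], lateral_m: List[float]) -> List[Obstacle]:
    """Perception: turn raw range readings into obstacle estimates."""
    return [Obstacle(d, l) for d, l in zip(ranges_m, lateral_m) if d > 0.0]


def plan(obstacles: List[Obstacle], safe_distance_m: float = 20.0) -> str:
    """Planning: pick a driving decision from the perceived environment."""
    # Hypothetical rule: only obstacles within 1.5 m of the lane centre matter.
    in_lane = [o for o in obstacles if abs(o.lateral_m) < 1.5]
    if any(o.distance_m < safe_distance_m for o in in_lane):
        return "stop"
    return "cruise"


def control(decision: str) -> Command:
    """Control: translate the decision into actuator commands."""
    if decision == "stop":
        return Command(throttle=0.0, brake=1.0, steering=0.0)
    return Command(throttle=0.3, brake=0.0, steering=0.0)


def pipeline_step(ranges_m: List[float], lateral_m: List[float]) -> Command:
    """One pass through perception, planning and control."""
    return control(plan(perceive(ranges_m, lateral_m)))
```

Because each stage only consumes the output of the previous one, a stage can be replaced or run at its own rate without touching the others, which is the flexibility the modular design is credited with.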

Sensor Pre-processing:

Both vehicles require early-stage data pre-processing and fusion. The most common fusion occurs in the pose estimation of the vehicle, which provides its coordinates, velocity and orientation (yaw, roll and pitch). Kalman filters are used to integrate inertial measurements, wheel odometry and GPS measurements.
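As a minimal sketch of this kind of fusion, the following one-dimensional Kalman filter combines wheel odometry (used for prediction) with GPS fixes (used for correction). The real vehicles estimate a full pose; the scalar state, the noise values `q` and `r`, and the function names are simplifying assumptions made for illustration.

```python
def kalman_predict(x, p, u, q):
    """Prediction: move the estimate x by the odometry displacement u.

    p is the variance of the estimate and q the odometry noise variance.
    """
    return x + u, p + q


def kalman_update(x, p, z, r):
    """Correction: blend in a GPS position z with noise variance r."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


def fuse(odometry, gps, x0=0.0, p0=1.0, q=0.1, r=4.0):
    """Run predict/update over paired odometry steps and GPS fixes."""
    x, p = x0, p0
    for u, z in zip(odometry, gps):
        x, p = kalman_predict(x, p, u, q)
        x, p = kalman_update(x, p, z, r)
    return x, p
```

For example, `fuse([1.0] * 5, [1.2, 1.9, 3.1, 4.0, 5.1])` tracks a vehicle moving about one metre per step, and the returned variance is smaller than the GPS variance alone because the two sources are combined.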

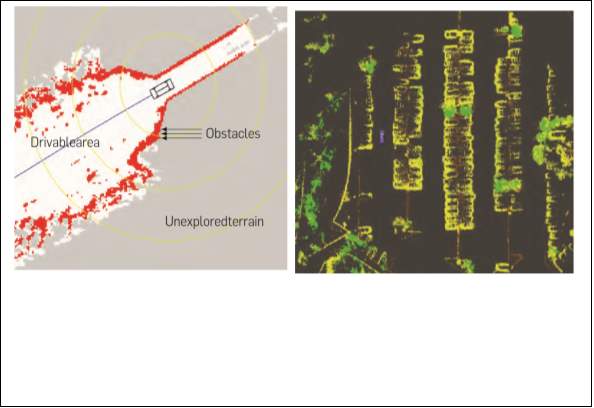

- Stanley: The environment sensor data must be pre-processed. This involves creating a 3D point cloud and converting it into 2D maps, which show the probability that a vertical obstacle is present. In layman’s terms, the surroundings are divided into drivable and non-drivable terrain, based on the data received from one or more sensors.

Figure 4 Resulting point cloud for vertical obstacles and adaptive vision (Thrun, 2010)

Fusion of multiple sensors is considered one of the most innovative elements of autonomous vehicles. However, Stanley could recognise obstacles only up to a range of 26 m. To overcome this, “adaptive vision” applies image processing techniques to identify similar textures in the camera image. This extends the obstacle detection range up to 200 m, which helped Stanley to drive safely.

- Junior: It uses a similar analysis, in which adjacent scan lines are compared to detect small obstacles such as curbs. The 3D scan lines are obtained from the laser range finder.
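The conversion from a 3D point cloud to a 2D drivability map can be sketched as follows. This is a simplified assumption: a cell is marked as an obstacle when the height spread of the points falling into it exceeds a threshold, which yields a yes/no answer rather than the probability described above. The cell size and threshold are hypothetical values.

```python
def obstacle_grid(points, cell_size=0.5, height_threshold=0.15):
    """Map (x, y, z) points to a 2D grid of obstacle flags.

    Returns a dict {(col, row): True/False}, where True means the cell
    contains a vertical structure taller than height_threshold metres.
    """
    z_range = {}  # cell -> (lowest z, highest z) seen so far
    for x, y, z in points:
        cell = (int(x // cell_size), int(y // cell_size))
        lo, hi = z_range.get(cell, (z, z))
        z_range[cell] = (min(lo, z), max(hi, z))
    return {cell: (hi - lo) > height_threshold
            for cell, (lo, hi) in z_range.items()}


# Flat ground in one cell, a 0.6 m step in another.
cloud = [(0.1, 0.1, 0.0), (0.2, 0.2, 0.05), (1.1, 0.1, 0.0), (1.2, 0.1, 0.6)]
grid = obstacle_grid(cloud)
```

In this example `grid[(0, 0)]` is drivable and `grid[(2, 0)]` is flagged as an obstacle. A small threshold would also pick up low structures such as curbs.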

Localisation:

Localisation links the estimated INS pose (coordinates, orientation and velocity of the vehicle) to the map. The error in the INS estimate can be a metre or more, which is not acceptable in the real world. Localisation is therefore necessary to keep the robotic car safe and to avoid collisions with potential obstacles.

- Stanley: It estimated only the lateral location of the vehicle, by analysing a discrete set of lateral offsets with respect to the map. Localisation adjusted the INS pose so that the centre line of the map coincided with the drivable corridor. As a result, the robot was able to stay centred on the road.

- Junior: It uses a similar pose estimation method and additionally uses the infrared remission values returned by the laser where needed, since lane markings cannot be detected from range-based features alone. To reduce small GPS errors, the INS pose was combined with the remission values, which yields a posterior distribution over the lateral offset to the map.
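A posterior over a discrete set of lateral offsets can be sketched with a single Bayes update. The Gaussian measurement model, the `sigma` value and the function name are assumptions made for illustration; the essay does not specify the model the vehicles used.

```python
import math


def lateral_posterior(offsets, prior, measured_offset, sigma=0.3):
    """Bayes update over candidate lateral offsets (in metres).

    offsets: candidate offsets of the vehicle relative to the map
    prior: probability of each candidate before the measurement
    measured_offset: offset suggested by the sensors (for example, from
        lane markings seen in the laser remission values)
    """
    likelihood = [math.exp(-0.5 * ((measured_offset - o) / sigma) ** 2)
                  for o in offsets]
    unnormalised = [p * l for p, l in zip(prior, likelihood)]
    total = sum(unnormalised)
    if total == 0.0:
        return list(prior)  # measurement carries no usable information
    return [u / total for u in unnormalised]


offsets = [-1.0, -0.5, 0.0, 0.5, 1.0]
posterior = lateral_posterior(offsets, [0.2] * 5, measured_offset=0.5)
best_offset = offsets[posterior.index(max(posterior))]
```

The most probable offset (`best_offset`) would then be used to shift the INS pose so that it lines up with the map.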

Figure 5 Posterior lateral position indicated by yellow graph from Junior (Thrun, 2010)

Figure 6 Stanley’s localisation using momentary sensor (Thrun, 2010)

Obstacle Tracking:

The robotic system encounters two types of obstacle: static and dynamic. Static obstacles include stones and parked cars, whereas dynamic obstacles are moving ones. Static obstacles can be mapped using grid maps.

Figure 8 Stanley’s obstacle mapping (Thrun, 2010)

The white area indicates the terrain and the dark grey area indicates the obstacles.

Figure 7 Junior distinguishing curbs and vertical obstacles (Thrun, 2010)

Dynamic obstacles require temporal differencing: two consecutive laser scans are compared for objects that are present in one and absent in the other, which indicates a potentially moving object. To this end, Junior is also equipped with three radar detectors, two pointing to the sides and one pointing forward, to make the vehicle more reliable when moving in traffic.
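Temporal differencing can be sketched by rasterising two consecutive scans into grid cells and comparing the occupied cells. This assumes both scans have already been transformed into the same fixed frame (that is, the vehicle's own motion has been compensated); the cell size and function names are hypothetical.

```python
def occupied_cells(scan_points, cell_size=0.5):
    """Set of grid cells hit by at least one (x, y) laser return."""
    return {(int(x // cell_size), int(y // cell_size))
            for x, y in scan_points}


def moving_candidates(prev_scan, curr_scan, cell_size=0.5):
    """Cells occupied in one scan but not in the other."""
    prev = occupied_cells(prev_scan, cell_size)
    curr = occupied_cells(curr_scan, cell_size)
    return {"appeared": curr - prev, "vacated": prev - curr}


# A parked car at (5.0, 1.0) and a car ahead moving from x = 10.0 to 10.6.
previous = [(5.0, 1.0), (10.0, 0.0)]
current = [(5.0, 1.0), (10.6, 0.0)]
diff = moving_candidates(previous, current)
```

Here the parked car produces no difference, while the moving car shows up as one vacated cell and one newly occupied cell.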

Path Planning:

Path planning makes the driving decisions: it navigates the car along the road based on upcoming threats and the map of the road. It answers questions such as “Where should the car move on the road?” and “What should it do if a threat is encountered?”. This enables a safe, smart and smooth journey.

- Stanley: In the Grand Challenge it used a basic planning technique that rolls out multiple candidate trajectories and chooses among them so as to minimise the risk of collision. The method also favours paths near the centre of the road over those closer to its periphery.

- Junior: It works on a similar idea, but because the Urban Challenge was far more demanding, the vehicles had to choose their own route. A shortest-path planner was used to estimate the drive time to the goal from the vehicle’s current location.
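The idea of rolling out several candidate trajectories and picking the safest one can be sketched as below. Each candidate is reduced to a single lateral offset from the road centre, candidates that pass too close to an obstacle are discarded, and the remaining candidate closest to the centre is chosen. The clearance value and the centre-preference rule are assumptions made for this example.

```python
def choose_lateral_offset(candidates, obstacle_offsets, clearance=1.0):
    """Pick the collision-free candidate closest to the road centre.

    candidates: lateral offsets (m) of the rolled-out trajectories
    obstacle_offsets: lateral offsets (m) of obstacles ahead
    Returns None when every candidate is blocked.
    """
    best, best_cost = None, float("inf")
    for c in candidates:
        if any(abs(c - o) < clearance for o in obstacle_offsets):
            continue  # this trajectory would pass too close to an obstacle
        cost = abs(c)  # prefer staying near the centre line
        if cost < best_cost:
            best, best_cost = c, cost
    return best


rollouts = [-2.0, -1.0, 0.0, 1.0, 2.0]
nudge = choose_lateral_offset(rollouts, obstacle_offsets=[0.2])
```

With an obstacle slightly left of centre, the centre and the first left candidate are rejected and the vehicle nudges one metre to the right (`nudge == -1.0`).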

On the other hand, momentary traffic situations do not always leave time for this kind of planning, so Junior also considered discrete decisions such as lane changes and U-turns, which let the robot react quickly.

For unstructured navigation, Junior uses an algorithm that searches a tree to find the shortest path. By generating continuous waypoints, the robot can quickly find a path in parking lots.

Figure 9 Junior’s algorithm for search tree and resulting path (Thrun, 2010)
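A shortest-path search of the kind mentioned above can be sketched with Dijkstra's algorithm over a small road graph whose edge weights are drive times in seconds. Dijkstra's algorithm is used here as a generic stand-in, since the essay does not name the algorithm Junior used, and the graph is invented for the example.

```python
import heapq


def shortest_drive_time(graph, start, goal):
    """Return (total_time, path) for the fastest route from start to goal.

    graph: {node: {neighbour: drive_time_seconds}}
    Returns (inf, []) when the goal cannot be reached.
    """
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        elapsed, node, path = heapq.heappop(queue)
        if node == goal:
            return elapsed, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, dt in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (elapsed + dt, neighbour, path + [neighbour]))
    return float("inf"), []


roads = {
    "A": {"B": 30, "C": 10},
    "C": {"B": 15, "D": 60},
    "B": {"D": 20},
}
time_s, route = shortest_drive_time(roads, "A", "D")
```

In this example the direct segment from A to B is slower than the detour through C, so the planner returns the route A, C, B, D with a drive time of 45 seconds.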

Behaviours And Control:

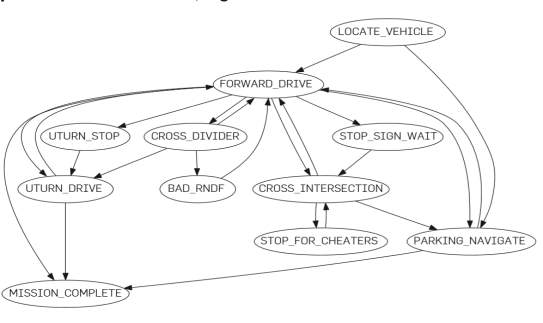

The behavioural module handles situations that cannot be predicted by standard deduction from the sensor data. One example is anticipating a potential traffic jam and taking an alternative route to avoid adding to the congestion. Junior invoked an algorithm to find an admissible route to its destination, which demonstrated the vehicle’s ability to succeed in the Urban Challenge. Its behaviour module is implemented as a finite state machine, so that the robot is not left idle in unexpected situations and follows the legal traffic rules.

Figure 10 Finite state machine of Junior robot
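A behaviour module built as a finite state machine can be sketched as a transition table. The state and event names below are hypothetical and are not the states of Junior's actual state machine.

```python
# (current state, event) -> next state; all names are illustrative only.
TRANSITIONS = {
    ("FORWARD_DRIVE", "stop_sign_ahead"): "STOP_SIGN_WAIT",
    ("STOP_SIGN_WAIT", "intersection_clear"): "CROSS_INTERSECTION",
    ("CROSS_INTERSECTION", "intersection_passed"): "FORWARD_DRIVE",
    ("FORWARD_DRIVE", "road_blocked"): "U_TURN",
    ("U_TURN", "turn_complete"): "FORWARD_DRIVE",
}


def next_state(state, event):
    """Follow a transition if one is defined, otherwise stay in place."""
    return TRANSITIONS.get((state, event), state)


def run(events, state="FORWARD_DRIVE"):
    """Feed a sequence of events through the machine; return visited states."""
    history = [state]
    for event in events:
        state = next_state(state, event)
        history.append(state)
    return history


trace = run(["stop_sign_ahead", "intersection_clear", "intersection_passed",
             "road_blocked", "turn_complete"])
```

Because every state has a defined way out (here, a blocked road leads to a U-turn rather than waiting indefinitely), the robot does not get stuck, and traffic rules such as waiting at a stop sign are encoded directly in the transitions.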

The control module operates the vehicle itself. It makes sure that the actuators respond correctly to the inputs so that the desired output is achieved. Multiple PID controllers were used to adjust steering and speed along the planned path. Speed limits, speed through curves, backward manoeuvres and other controllers are calculated and executed in this module.

To conclude, each new DARPA challenge pushed the robots to adopt new technologies, and as a result future cars will be more acceptable, both legally and socially. A key remaining challenge for the robots is “low-frequency” change: when new roads are built, lanes are changed or roads are blocked, the robot cannot react adequately. In future this could be addressed by adding modules that help the robot to make decisions as humans do. Several experiments have shown that robotic technology has advantages over human driving. However, it cannot replace human intelligence, especially in unusual circumstances.
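A PID controller of the kind mentioned above can be sketched as follows. The gains and the use of a speed error are illustrative assumptions; a real vehicle would tune separate controllers for steering and speed.

```python
class PID:
    """Textbook PID controller: output = kp*e + ki*integral(e) + kd*de/dt."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        """Return the control output for the current error and time step dt."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0  # no previous sample on the first call
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical speed controller: error = target speed - measured speed (m/s).
speed_pid = PID(kp=2.0, ki=0.5, kd=0.1)
first = speed_pid.update(error=1.0, dt=0.1)
second = speed_pid.update(error=0.5, dt=0.1)
```

The proportional term reacts to the current error, the integral term removes steady offsets, and the derivative term damps the response as the error shrinks, which is why the second output is much smaller than the first.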

Stages of technology development:

The introduction of autonomous cars has enormous societal benefits, which can be realised by deploying the technology in the marketplace.

Present:

For many years, many people have worked on autonomous vehicles to relieve human drivers. In recent years, various companies have launched autonomous vehicles with a range of technologies for more comfortable transportation. A few are listed below:

- United States of America, 2017: Vehicle to vehicle technology to avoid collision in autonomous vehicles.

- Tesla, 2019: Autonomous cars with autopilot features to drive semi-autonomously by using artificial intelligence.

- Google, 2018: Self-driving cars that perform all safety functions, including parking.

- Nissan, 2018: Manoeuvring and ACC (Adaptive Cruise Control) features

By the end of 2020, the era of autonomous cars is expected to begin changing the lives of customers and the automotive industry.

However, in my view, the best-known contribution to robotic cars is Google’s. The company has been developing autonomous cars since 2010 and continues to innovate, bringing a new level of change to the automobile industry.

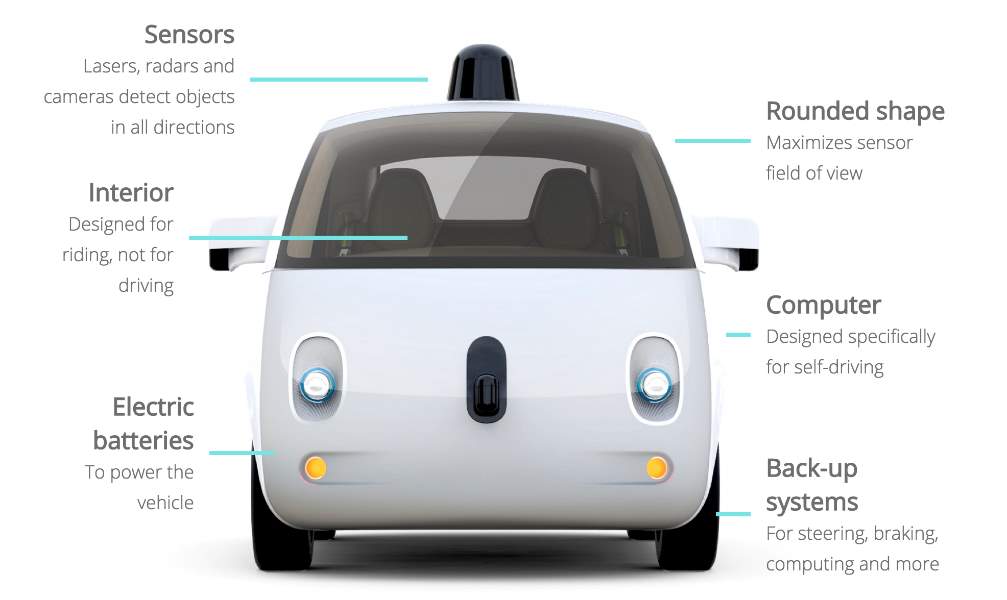

Figure 5 prototype of Google’s unmanned car (9to5google, 2015)

Google’s unmanned car project was guided by Sebastian Thrun, who also wrote “Toward robotic cars”. He explains:

- Google’s car controls the steering and speed by itself while detecting obstacles. Stop-and-go operation in traffic congestion is handled automatically.

- It integrates hardware sensors, artificial intelligence and Google Maps to execute the driving functions.

- An important aspect of Google’s car is that it has no steering wheel, accelerator or brake pedal. Instead, the system comprises start, stop and pull-over buttons and a GPS with a computer screen that provides satellite locations from a database.

- The hardware sensors, such as LiDAR, a position estimator and distance sensors, are used to obtain the real-time environmental conditions.

Once this information has been collected, the passenger sets the destination and the car’s software calculates a route and an expected arrival time. The LiDAR, mounted on the roof of the car, measures the range to surrounding cars and builds a basic 3D map. An ultrasonic sensor is used to determine the position of the vehicle and also the distance to obstacles.

The software installed in Google’s car gives advance notification of factors such as traffic signals and lane changes. In adverse situations, a human can take control of the vehicle using the override function.

Future development of this field

Park assistant: Future autonomous vehicles will steer themselves into bay and parallel parking spaces. The park assistant guides the user by measuring the parking space and controlling the steering and reversing to reach the ideal line. The driver only has to operate the brake and accelerator, and retains overall control of the vehicle.

ACC with stop and go function: Adaptive cruise control is already implemented in current vehicles such as the Audi A3 and Volvo S60. However, it only maintains the distance between vehicles to avoid collisions. With a stop and go function, the vehicle also controls the brake and accelerator to manage the distance in slow-moving traffic and congestion.

Lane keeping assist (LKA): It assists the driver in staying within the lane, typically at speeds of around 70 km/h. If the vehicle drifts out of its lane, LKA detects the lane lines and the vehicle position and takes corrective action.
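The distance-keeping behaviour of ACC with stop and go can be sketched with a simple time-gap rule: the desired gap grows with speed, and the commanded speed follows the lead vehicle while correcting the gap error. The time gap, standstill gap and gain are hypothetical values, not figures from any production system.

```python
def acc_target_speed(own_speed, lead_speed, gap_m, set_speed,
                     time_gap_s=1.8, standstill_gap_m=3.0, gain=0.5):
    """Return the speed (m/s) the vehicle should aim for.

    own_speed, lead_speed: current speeds of this car and the car ahead
    gap_m: measured distance to the car ahead
    set_speed: cruise speed chosen by the driver (upper limit)
    """
    desired_gap = standstill_gap_m + time_gap_s * own_speed
    target = lead_speed + gain * (gap_m - desired_gap)
    # Never reverse, never exceed the driver's set speed.
    return max(0.0, min(set_speed, target))
```

When the lead car stops and the gap has shrunk to the standstill gap, the target speed is zero ("stop"); as soon as the lead car pulls away and the gap opens, the target becomes positive again ("go"), without any input from the driver.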

Further future applications:

Military applications: On the battlefield, autonomous vehicles can be used for automatic navigation and real-time decision making.

Taxi service: A taxi service picks up and drives people who do not own a car. Since taxis drive all around cities, there is a place for autonomous vehicles to deliver objects or pick up people. In busy areas, people could easily request a car and reach their destination without delay.

Figure 6 Volvo XC90s first self-driving car tested for Taxi (BloombergBusinessweek, 2016)

Many forms of public transport are controlled by a human operator, although the tasks vary with the system. In rail-based transport, the operator mainly accelerates and decelerates, while paying attention to track changes. In a shuttle or bus, the operator has to pay more attention to traffic rules, pedestrians, other drivers, bus lanes and stations. The operator must react to, control and handle all of these situations simultaneously. Implementing autonomous vehicles would therefore improve the system by performing all of these tasks.

Social, legal and ethical impact:

- Safety: In public transportation, safety has a major impact on people’s lives, and traffic accidents have a negative impact on today’s economy. For this reason, most research on autonomous vehicles focuses on safety systems. Implementing these in future could prevent up to 90% of the accidents caused by human error.

- According to EUROSTAT, after intelligent systems were introduced in vehicles, the number of people affected fell from 56,809 to 28,849 by 2010 compared with the previous system. The source describes this as a 30% reduction, although the figures quoted correspond to a reduction of roughly 49%. (Jagtap, 2015)

- Traffic impacts: Introducing autonomous vehicles would drastically change traffic flow. In the initial stage, highways and lane changes would be affected, since human-controlled and automatically driven vehicles would share the road. Problems could arise from the way motorists react to autonomous vehicles, and integrating unmanned vehicles into the traffic flow could be chaotic. Autonomous vehicles would also have to follow the traffic laws, whereas human drivers have the option to break them. Over time, autonomous vehicles will become a common form of transport and will create less congestion, because they can merge quickly and exit highways easily. As a result, there is potential for an economic improvement.

Economic and industrial impact:

- Fuel economy: Autonomous vehicles can accelerate and brake more efficiently than human drivers, so they can achieve optimum performance and better fuel efficiency. Even a 1% improvement in fuel economy would save billions of dollars in the United States. Implementing autonomous systems could save 25% of the fuel compared with human-controlled vehicles.

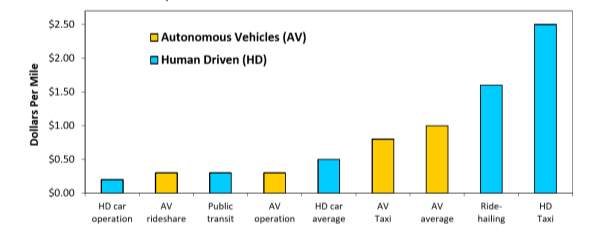

Cost comparison

The graph below shows that autonomous vehicles (AV) cost less than human-driven (HD) taxis and public transit.

Figure 7 Graph of cost comparison (9to5google, 2015)

On the other hand, personal autonomous vehicles will be more expensive than operating an HD car, but AV ridesharing will be less expensive than HD ride-hailing. As the availability of AVs increases, demand for them will grow and car ownership will fall. AVs will therefore provide major savings for commercial transport such as buses and shuttles.

The technologies and equipment used in autonomous vehicles, such as the GPS module, LiDAR, high-resolution cameras and position sensors, are expensive, which is considered one of the drawbacks.

Environmental impact:

Most unmanned cars are fully electric, so they require significantly less energy and fuel. They lead to emissions only indirectly, when a depleted battery has to be recharged. Overall this results in less air pollution, although battery power is also consumed while the vehicle is stationary.

Smart traffic light: Autonomous intersections will open up new prospects for cities, which can focus more on green space and pedestrians. Traffic lights will not be required for autonomous vehicles, since the vehicles merge through the intersection within the traffic flow.

Figure 8 Autonomous and traditional traffic flow (9to5google, 2015)

The picture shows that traditional intersections have more traffic, indicated in red.

With autonomous intersections, getting in and out of cities will be easier for many people thanks to steady traffic flows, which reduces the need for people to live downtown.

Challenges, dangers and opportunities:

- Malicious hacking: Criminals or hackers could manipulate self-driving cars.

- Platooning risk: Although autonomous vehicles have the potential to reduce congestion, this relies on platooning, where vehicles drive close together on highways. This creates a new risk by increasing the severity of crashes, particularly where human drivers are involved.

- Software and hardware failure: Vehicles with complex electronic systems can fail because of small faults such as interference or a software error. Even a small calibration error can have catastrophic results. The real question, however, is how frequently such crashes happen compared with crashes caused by human operators.

- Non-auto travellers: Communicating with and accommodating pedestrians and motorists is complex.

Designing and implementing the artificial intelligence software for an autonomous vehicle is complicated, since it is the decision-making “brain” of the system. Because the system relies entirely on artificial intelligence, it is affected when road conditions vary. The robots cannot make decisions as humans do in non-ideal conditions. Driving in snow, for example, requires considerable skill, especially where lane markings are hidden on snowy roads. More computer vision algorithms will have to be added to the AI system to transfer human skills to autonomous vehicles.

Conclusions:

At present, there are many separate systems, including ACC, autopilot, GPS, lane change assistance and steering control. Combining all of these technologies into one system leads towards a fully autonomous vehicle. However, the real objective is to win people’s trust in letting a computer drive the vehicle. This can only be achieved through extensive testing and research that give assurance about the final product. Autonomous vehicles will not be accepted instantly, but people will come to understand the benefits of the system over time.

References

- 9to5google. (2015, June 5). Retrieved from https://ww.9to5google.com/2015/06/05/google-self-driving-cars-accidents-report/

- Bimbraw, K. (2015). Autonomous Cars: Past, Present and Future, 1-9.

- BloombergBusinessweek. (2016, August 18). Retrieved from https://www.bloomberg.com/news/features/2016-08-18/uber-s-first-self-driving-fleet-arrives-in-pittsburgh-this-month-is06r7on

- Jagtap, G. D. (2015). GOOGLE DRIVERLESS CAR, 10-39.

- Thrun, S. (2010). Toward robotic car, 99-106.
